Problem:

Before this tool existed, researchers and survey reporting teams relied entirely on manual workflows.

Open-ended responses were analyzed using Excel — extracting keywords, creating categories, and reviewing responses by hand to synthesize insights.

This process was time-consuming, inconsistent, and difficult to scale across studies.

However, speed was not the only challenge — maintaining accuracy, consistency, and trust in the analysis was equally critical.

Challenge: Why Existing AI Tools Fell Short

Before building a custom solution, the team experimented with third-party AI platforms to summarize and categorize open-ended survey responses.

While these tools promised efficiency, they introduced new problems that ultimately limited their usefulness in real research workflows:

Low reliability — AI-generated categorizations often required extensive manual review and correction.

Limited customization — third-party tools could not adapt to our specific research workflows or product requirements.

Increased rework — instead of reducing effort, AI outputs sometimes created additional overhead.

Data privacy concerns — sensitive research data raised risks around security, access, and potential information leakage.

As a result, existing AI solutions failed to strike a meaningful balance between efficiency, control, and trust—three qualities essential for research-grade analysis.

Design Principles

To guide the design of the AI-Assisted Survey Response Analysis Tool, we established the following principles—grounded in real research workflows and the limitations of existing AI solutions.

Human-in-the-loop by default

AI should assist, not replace, researcher judgment. The system provides AI-generated starting points while ensuring researchers remain in control to review, edit, and refine results at every stage.

Transparent and editable AI outputs

AI results should never be a black box. Categories and classifications must be visible, understandable, and easy to modify—so researchers can validate and trust the output.

Designed for iteration, not one-time automation

Research analysis is rarely final on the first pass. The experience supports re-categorization and refinement, reflecting how researchers actually work over time.

Customizable to real research workflows

Different studies require different categorization logic. The tool adapts to internal processes and reporting needs, rather than forcing teams into rigid third-party structures.

Privacy-first and secure by design

Survey data is often sensitive. The system prioritizes data protection and minimizes external exposure to reduce security and information leakage risks.

Solution Overview

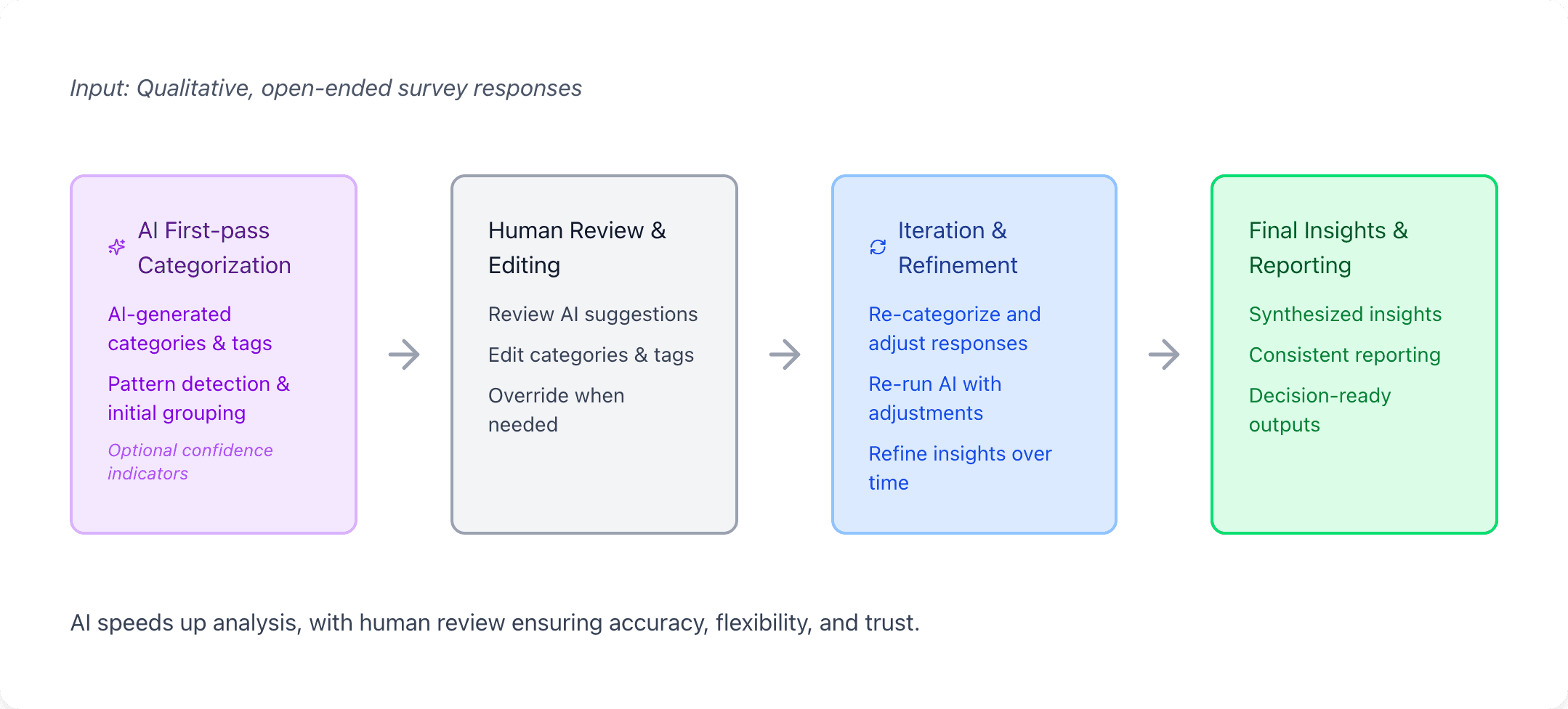

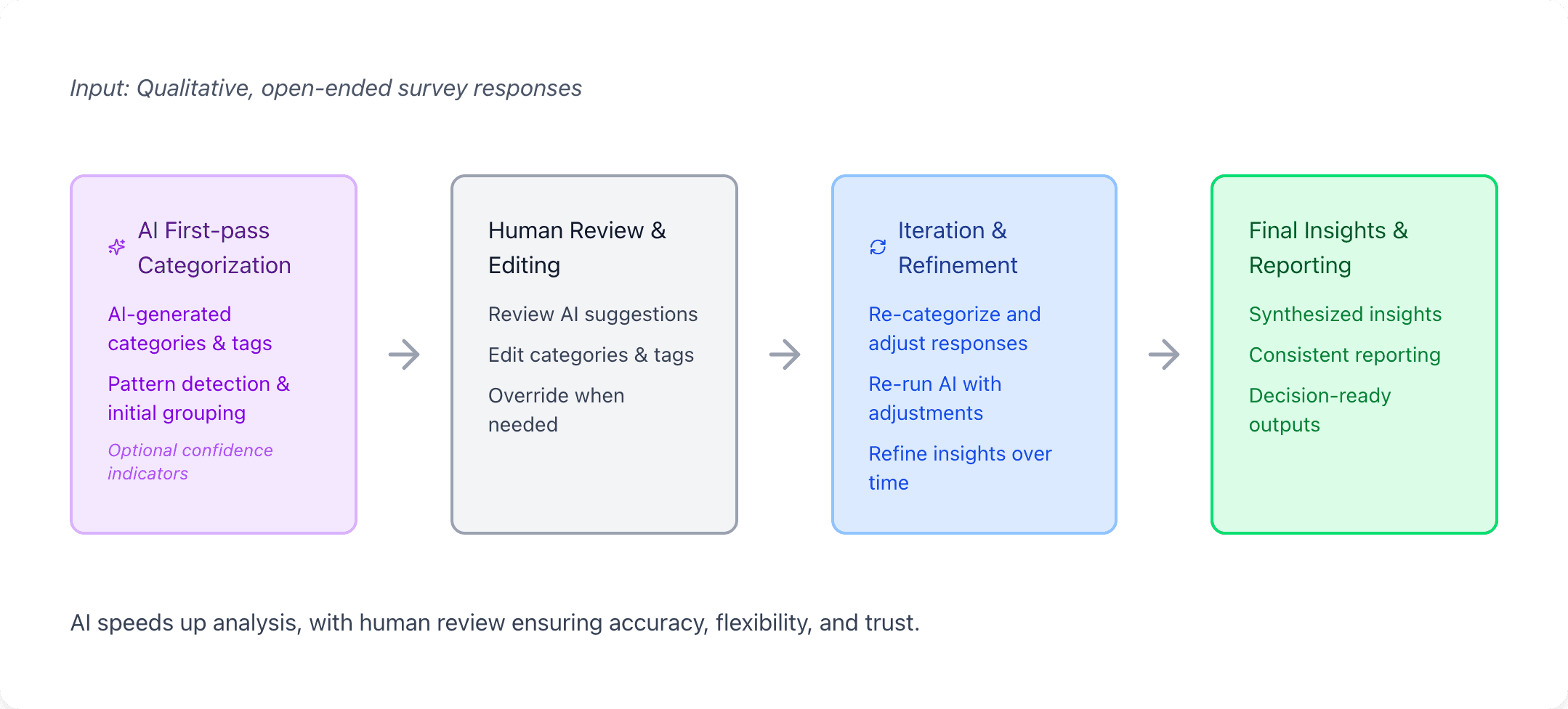

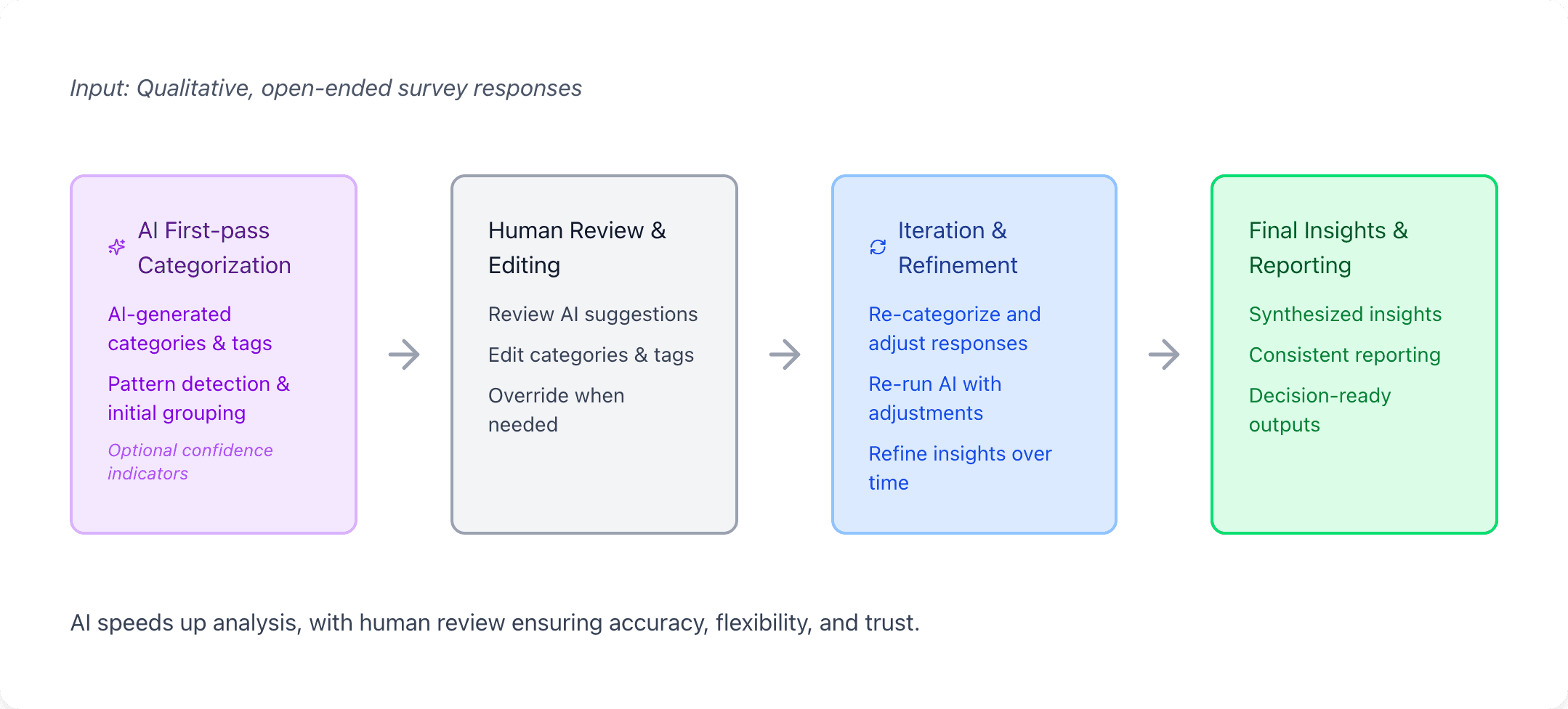

The solution is a custom AI-assisted research tool designed to help teams analyze and categorize open-ended survey responses with greater speed, consistency, and confidence.

Rather than fully automating the process, the system positions AI as a first-pass assistant. AI generates initial categories and tags based on response patterns, reducing manual effort. Researchers then review, edit, and refine these results—maintaining full ownership over the final insights. The experience is built around real research workflows:

• AI provides an initial analytical structure, not a final answer

• Researchers can review, modify, and re-categorize at any stage

• The system supports iteration as understanding evolves

By combining AI efficiency with human judgment, the tool transforms a previously manual, Excel-based workflow into a scalable and trustworthy research system—without compromising accuracy, customization, or data security.

Key Design Decision

Why Separate Single Edit and Bulk Edit

Through user testing sessions with the Report and Access teams, I observed that researchers consistently operated in two very different mental modes.

At times, they needed to carefully review AI suggestions one response at a time—checking nuance, context, and intent. At other times, they wanted to apply the same change across dozens or even hundreds of responses after identifying a pattern.

Early explorations attempted to support both behaviors within a single interface. However, this quickly introduced confusion, increased cognitive load, and raised the risk of accidental bulk changes.

The Decision

I intentionally separated the experience into Single Edit Mode and Bulk Edit Mode, rather than combining both into an overloaded workflow.

Single Edit Mode supports precision and confidence, allowing researchers to validate and fine-tune AI suggestions at the individual response level.

Bulk Edit Mode supports speed and scale, enabling users to apply consistent changes across multiple responses efficiently and safely.
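As a rough illustration of the separation described above, the two modes can be modeled as distinct operations with different safeguards. This is a minimal sketch, not the tool's actual implementation: the `categories` mapping (response id to category) and the function names `edit_single`, `bulk_edit_scope`, and `apply_bulk_edit` are all hypothetical.

```python
from __future__ import annotations


def edit_single(categories: dict[str, str], response_id: str, new_category: str) -> None:
    """Single Edit: change exactly one response, addressed by its id."""
    if response_id not in categories:
        raise KeyError(response_id)
    categories[response_id] = new_category


def bulk_edit_scope(categories: dict[str, str], selected_ids: set[str], new_category: str) -> int:
    """Bulk Edit, step 1: report how many selected responses would change."""
    return sum(
        1
        for rid in selected_ids
        if rid in categories and categories[rid] != new_category
    )


def apply_bulk_edit(categories: dict[str, str], selected_ids: set[str], new_category: str) -> int:
    """Bulk Edit, step 2: apply the change to the explicit selection only."""
    changed = 0
    for rid in selected_ids:
        if rid in categories and categories[rid] != new_category:
            categories[rid] = new_category
            changed += 1
    return changed
```

In this sketch, a bulk change can only touch an explicit selection, and its scope can be shown to the user before anything is applied, which mirrors the goal of keeping bulk actions intentional.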

Why This Matters

This separation aligns the interface with users’ mental models, rather than forcing them to adapt to the system.

It reduces error risk by making bulk actions an explicit, intentional mode.

It improves efficiency by optimizing each mode for a specific task.

It builds trust in AI-assisted workflows by ensuring users always understand the scope and impact of their actions.

By designing around distinct user intentions instead of a one-size-fits-all interaction, the experience feels both powerful and safe—especially critical in AI-driven environments where mistakes can scale quickly.

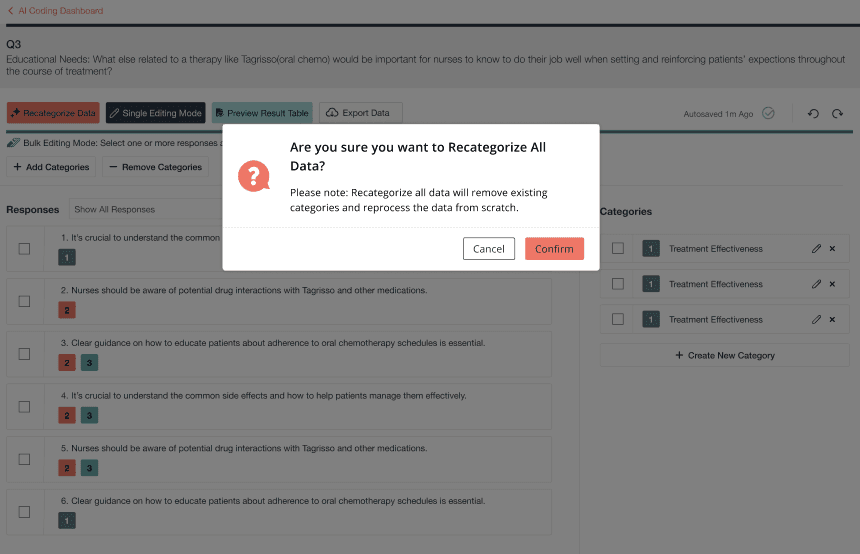

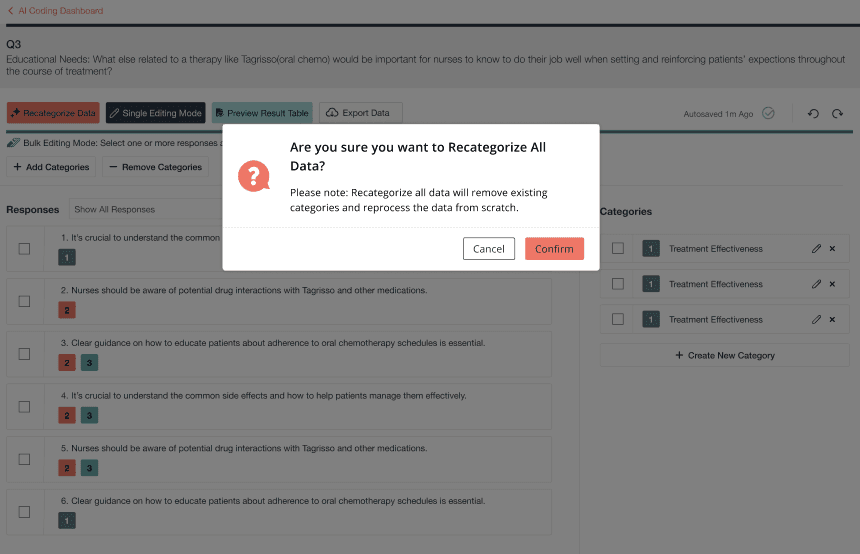

Why Re-Categorization Requires Strong Warnings

Re-categorization is one of the most powerful—and risky—actions in the AI-Assisted Survey Response Analysis Tool.

During usability testing, multiple researchers hesitated before re-categorizing, unsure what would happen to their previous work.

From a researcher’s perspective, re-running categorization may feel like a simple reset. In reality, it removes all existing manual adjustments that researchers have carefully applied to the data—a single action that can overwrite hours of thoughtful review.

AI-assisted analysis also introduces uncertainty. Re-processing the same set of responses may produce different results, and once manual changes are cleared, they cannot be easily recovered.

The Design Question: How might we let researchers benefit from AI assistance without letting an irreversible action silently undo their work?

The Decision

I designed explicit, high-visibility warning states before re-categorization:

Clear language explaining exactly what will be removed

A deliberate confirmation step to prevent accidental actions

A visible progress state during re-processing

A clear success message once the process completes
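The confirmation step can be pictured as a guard in front of the destructive operation. The sketch below is illustrative only and makes several assumptions: the `Response` model, the `manual_category` override field, the `classify` callable standing in for the AI step, and the `confirmed` flag are all hypothetical names, not the tool's real data model.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Response:
    text: str
    ai_category: Optional[str] = None
    manual_category: Optional[str] = None  # researcher override, if any


def count_manual_edits(responses: list[Response]) -> int:
    """Number of responses whose manual override would be lost."""
    return sum(1 for r in responses if r.manual_category is not None)


def recategorize(
    responses: list[Response],
    classify: Callable[[str], str],
    confirmed: bool = False,
) -> int:
    """Re-run categorization, refusing to clear manual edits without confirmation.

    Returns the number of manual edits that were removed.
    """
    at_risk = count_manual_edits(responses)
    if at_risk and not confirmed:
        # The UI would show the warning modal here, stating exactly what is lost.
        raise PermissionError(
            f"{at_risk} manual edit(s) would be removed; confirmation required"
        )
    for r in responses:
        r.ai_category = classify(r.text)
        r.manual_category = None
    return at_risk
```

The count of at-risk edits is what lets the warning state exactly what will be removed, rather than showing a generic "Are you sure?" prompt.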

Why This Matters

These warnings act as guardrails, not friction.

They protect researchers from unintended data loss

They make AI system behavior transparent and predictable

They build confidence to experiment, knowing the system will not surprise them

By treating re-categorization as a high-stakes decision, rather than a casual action, the experience establishes long-term trust. Researchers remain in control, even when underlying AI behavior is complex or irreversible.

📸 Re-categorization warning modal, loading state, and success confirmation

By pairing strong warnings with transparent system feedback, researchers remain confident and in control—even when working with irreversible AI-driven operations.

Why Results Preview Is Critical Before Export

During testing, I noticed that researchers rarely felt confident immediately after finishing categorization.

What they really wanted to know wasn’t “Did I finish tagging?” — but “Do these results actually make sense?”

Without a way to validate outcomes, researchers had to export data blindly and only discover issues later, often after the data had already been shared.

The Challenge

AI-assisted analysis can produce results quickly, but speed alone doesn’t equal confidence.

Researchers needed a way to sanity-check patterns, spot gaps, and assess coverage before committing to an export.

The Decision

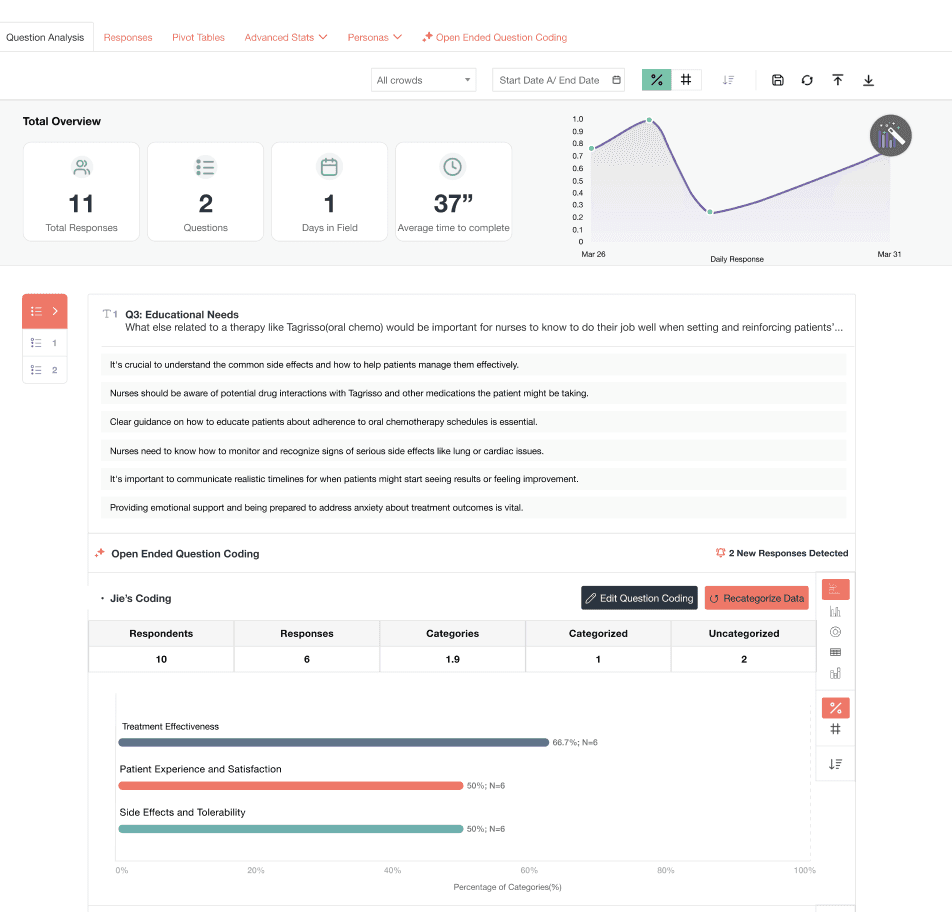

I introduced a Results Preview that summarizes categorization outcomes before export:

High-level metrics showing categorized vs. uncategorized responses

A bar chart visualizing category distribution and relative weight

A lightweight preview that supports quick validation without interrupting the workflow
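The metrics behind such a preview are simple to derive. The following is a minimal sketch under stated assumptions: each response is represented by its category name, or `None` if uncategorized, and the function name `summarize_results` and the shape of the returned dictionary are hypothetical.

```python
from __future__ import annotations

from collections import Counter
from typing import Optional


def summarize_results(categories: list[Optional[str]]) -> dict:
    """Summarize categorization outcomes for a pre-export preview.

    `categories` holds one entry per response: a category name, or None
    when the response is still uncategorized.
    """
    total = len(categories)
    assigned = [c for c in categories if c is not None]
    counts = Counter(assigned)
    # Largest categories first, each with its share of categorized responses.
    distribution = [
        {"category": name, "count": n, "share": n / len(assigned)}
        for name, n in counts.most_common()
    ]
    return {
        "total": total,
        "categorized": len(assigned),
        "uncategorized": total - len(assigned),
        "distribution": distribution,
    }
```

The `distribution` list maps directly onto the bars of a category chart, while the `categorized` and `uncategorized` counts feed the high-level coverage metrics.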

Why This Matters

Results Preview shifts the experience from editing data to evaluating insights.

By surfacing patterns before export, researchers can validate quality, catch issues early, and make informed decisions—reducing rework and increasing confidence in AI-assisted analysis.

📸 Results preview with metrics and category distribution